A large-scale language model (LLM) is a language model built using massive amounts of text data and deep learning techniques. Compared with conventional language models, LLMs scale up three elements significantly:

- Amount of computation: Far more computation is used in training, which lets the model handle more complex tasks.

- Amount of data: More language patterns can be learned, so the model produces more natural, human-like sentences.

- Number of parameters: A larger model can capture more knowledge, making it more accurate and more versatile.

These features enable LLMs to perform well in a variety of natural language processing (NLP) tasks.
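To give a feel for what "number of parameters" means in practice, the sketch below estimates the size of a decoder-only Transformer with a common rule of thumb: about 12 × d_model² weights per layer (4 × d_model² for the attention projections and 8 × d_model² for a feed-forward block four times wider than the model). The function name and this approximation are assumptions for illustration; biases and layer-normalization weights are ignored. The example plugs in GPT-3's published configuration of 96 layers with a model width of 12,288.

```python
def approx_transformer_params(n_layers: int, d_model: int, vocab_size: int = 0) -> int:
    """Rough parameter count for a decoder-only Transformer (illustrative only).

    Assumes 12 * d_model**2 weights per layer:
      - 4 * d_model**2 for the query, key, value and output projections
      - 8 * d_model**2 for a feed-forward block with hidden width 4 * d_model
    Biases and layer-norm weights are ignored. Token embeddings
    (vocab_size * d_model) are added only if vocab_size is given.
    """
    per_layer = 12 * d_model * d_model
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


# GPT-3 (175B) configuration: 96 layers, model width 12,288
total = approx_transformer_params(n_layers=96, d_model=12288)
print(f"{total / 1e9:.1f}B parameters")  # → 173.9B parameters
```

The estimate lands close to the advertised 175 billion. Because the per-layer count grows with the square of the model width, doubling `d_model` roughly quadruples the parameter count.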

How do LLMs work?

1. Transformer Architecture

The neural network architecture underlying LLMs is called the Transformer. It was introduced by researchers at Google in 2017 and has the following advantages over traditional recurrent neural network (RNN) architectures:

- Handling long-distance dependencies: The Transformer can efficiently relate words that are far apart anywhere in a text, so it is well suited to understanding and generating long passages.

- Parallel processing: An RNN processes words one at a time, whereas the Transformer processes all positions in a sequence in parallel, which makes training much faster.

- Scalability: Because it trains efficiently on large datasets, the Transformer is well suited to building LLMs.

The original Transformer consists of two main components: the encoder and the decoder. Many LLMs, such as the GPT models, use only the decoder.

- Encoder: Processes the input sentence and produces a vector for each word that represents its meaning in context, including its relationships with the other words.

- Decoder: Using the encoder's output vectors and the words generated so far, it predicts the next word, one word at a time, to generate a sentence.
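The decoder's word-by-word prediction can be sketched as a simple loop: ask the model for a probability distribution over the next word, append the most likely one (greedy decoding), and repeat until an end-of-sentence token appears. The lookup table below is a hypothetical stand-in for a trained decoder and only looks at the previous word; a real Transformer decoder conditions on the entire prefix (and, in an encoder-decoder model, on the encoder's output vectors). The names `next_token_distribution` and `generate_greedy` are illustrative, not part of any library.

```python
# Hypothetical stand-in for a trained decoder: maps the previous word to a
# probability distribution over the next word. "<s>" marks the start of a
# sentence and "</s>" marks its end.
TOY_NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"sat": 1.0},
    "sat": {"down": 0.8, "</s>": 0.2},
    "down": {"</s>": 1.0},
}


def next_token_distribution(prefix: list[str]) -> dict[str, float]:
    """Return P(next word | prefix). This toy version only uses the last word."""
    return TOY_NEXT_TOKEN_PROBS[prefix[-1]]


def generate_greedy(max_new_tokens: int = 10) -> list[str]:
    """Generate words one at a time, always picking the most likely next word."""
    tokens = ["<s>"]
    for _ in range(max_new_tokens):
        probs = next_token_distribution(tokens)
        next_token = max(probs, key=probs.get)
        if next_token == "</s>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(generate_greedy()))  # → the cat sat down
```

Replacing the greedy `max` with random sampling from `probs` is a common way to get more varied output.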

Both the encoder and the decoder use a mechanism called self-attention, which calculates how strongly each word relates to every other word in the sentence and thereby helps the model understand context.
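A minimal, single-head version of scaled dot-product self-attention, the form used in the 2017 Transformer, is sketched below in plain Python. Each word's vector is projected into a query, a key and a value; the softmax of the scaled query-key dot products gives the attention weights, and each output row is the weighted average of the values. The optional `causal` flag (which stops a word from attending to later words, as in a decoder) and all helper names are assumptions of this sketch; real implementations use multiple heads, learned weight matrices and optimized tensor libraries.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: list[float]) -> list[float]:
    """Numerically stable softmax; -inf entries get weight 0."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                   causal: bool = False) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    x holds one row vector per word. Returns (outputs, attention_weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    weights = []
    for i, q_i in enumerate(q):
        # How strongly word i relates to every word j in the sentence
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / scale for k_j in k]
        if causal:
            # Decoder-style mask: word i may only look at words 0..i
            scores = [s if j <= i else -math.inf for j, s in enumerate(scores)]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value vectors
    return matmul(weights, v), weights


# Three 2-dimensional word vectors; identity projections keep the example small
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])  # each row of weights sums to 1
```

Every word is compared with every other word in a single pass, which is also why the Transformer can process a whole sequence in parallel instead of word by word.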

2. Huge amounts of training data

LLMs are trained on huge amounts of text data, including books, articles, news, and websites. The more data there is, the more language patterns an LLM can learn and the more natural and human-like the text it generates.

In recent years, the mainstream approach to training LLMs has been to combine supervised and self-supervised learning.

- Supervised learning: Training a model on data labeled with the correct answers.

- Self-supervised learning: The model learns from unlabeled data by deriving the labels from the data itself, for example by predicting the next word in a sentence.

Combining these methods allows LLMs to learn from far more data and become more accurate.
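Self-supervised learning is what lets LLMs use raw, unlabeled text: the "label" for each training example is simply the next word in the text. The sketch below turns a sentence into (context, next word) pairs. The function name, splitting on whitespace, and the fixed `context_size` window are simplifying assumptions; real LLMs use subword tokenizers and much longer contexts.

```python
def make_next_word_examples(text: str, context_size: int = 3) -> list[tuple[list[str], str]]:
    """Turn unlabeled text into (context, target) training pairs.

    The target of each pair is the word that follows the context, so the
    labels come from the text itself and no human annotation is needed.
    """
    words = text.split()  # simplistic whitespace tokenization
    examples = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]  # up to context_size previous words
        examples.append((context, words[i]))
    return examples


for context, target in make_next_word_examples("the cat sat on the mat"):
    print(context, "->", target)
# ['the'] -> cat
# ['the', 'cat'] -> sat
# ['the', 'cat', 'sat'] -> on
# ['cat', 'sat', 'on'] -> the
# ['sat', 'on', 'the'] -> mat
```

Supervised training, by contrast, needs pairs whose labels were prepared by people, such as a question paired with a reference answer.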

3. Advanced deep learning technology

LLMs are trained using advanced optimization algorithms such as Adam and RMSprop, together with regularization techniques such as dropout and L2 regularization, which help improve the model's accuracy and reduce overfitting.
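To make the optimizer and regularizers less abstract, the sketch below applies the standard Adam update rule to a one-parameter toy problem, minimizing (w - 3)², and shows how an L2 penalty (`l2 * w**2` added to the loss) pulls the optimum toward zero. It also includes inverted dropout, which randomly zeroes activations during training and rescales the survivors. The toy loss, the function names and the hyperparameter values are assumptions for illustration; many LLM training setups use AdamW, which applies weight decay separately instead of folding an L2 term into the gradient as done here.

```python
import math
import random


def adam_minimize(grad, w0: float, lr: float = 0.1, beta1: float = 0.9,
                  beta2: float = 0.999, eps: float = 1e-8, l2: float = 0.0,
                  steps: int = 500) -> float:
    """Minimize a 1-D loss with Adam, given its gradient function."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w) + 2 * l2 * w              # gradient of loss + l2 * w**2
        m = beta1 * m + (1 - beta1) * g       # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g   # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)          # bias correction for zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


def dropout(activations: list[float], p: float, rng: random.Random) -> list[float]:
    """Inverted dropout: zero each value with probability p, scale the rest by 1/(1-p)."""
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def toy_grad(w: float) -> float:
    """Gradient of the toy loss (w - 3)**2."""
    return 2 * (w - 3)


print(adam_minimize(toy_grad, w0=0.0))          # approaches 3
print(adam_minimize(toy_grad, w0=0.0, l2=0.5))  # approaches 3 / (1 + 0.5) = 2
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=random.Random(0)))
```

Dropout is switched off at inference time; scaling the surviving values by 1/(1-p) during training keeps the expected activation the same in both modes.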

LLMs can also be trained on specialized hardware such as Google's Tensor Processing Units (TPUs), which are designed to accelerate the matrix computations at the heart of neural networks and can significantly reduce LLM training time compared with general-purpose CPUs.

Business Applications of LLMs

LLMs are used in a variety of business fields. The main examples are as follows:

- Customer service: Automating customer interactions with chatbots and voice recognition systems

- Content creation: Automatically generating ad copy, articles, blog posts, etc.

- Translation: Translating between languages

- Search: Improving search results

- Marketing: Advertising tailored to customer needs

- Development: Generating software code

Beyond these use cases, LLMs have the potential to be applied in a wide range of fields.

Trivia: Services that use LLMs

This kind of language technology is already used in familiar services such as Google Translate and the voice assistant Amazon Alexa.

Challenges of LLMs

Although LLMs are very powerful tools, they do come with some challenges.

- Bias: LLMs may reproduce biases present in their training data.

- Ethics: LLMs can produce content that is ethically questionable.

- Accountability: It can be difficult to explain why an LLM produced a particular output.

To overcome these challenges, it is necessary to develop ethical guidelines for the development and use of LLMs.

The future of LLMs

LLMs are expected to keep advancing. As research and development progress, they may become more sophisticated and able to communicate more naturally with humans. They also have the potential to create new business models and services.

LLMs will likely become an increasingly integral part of our lives.

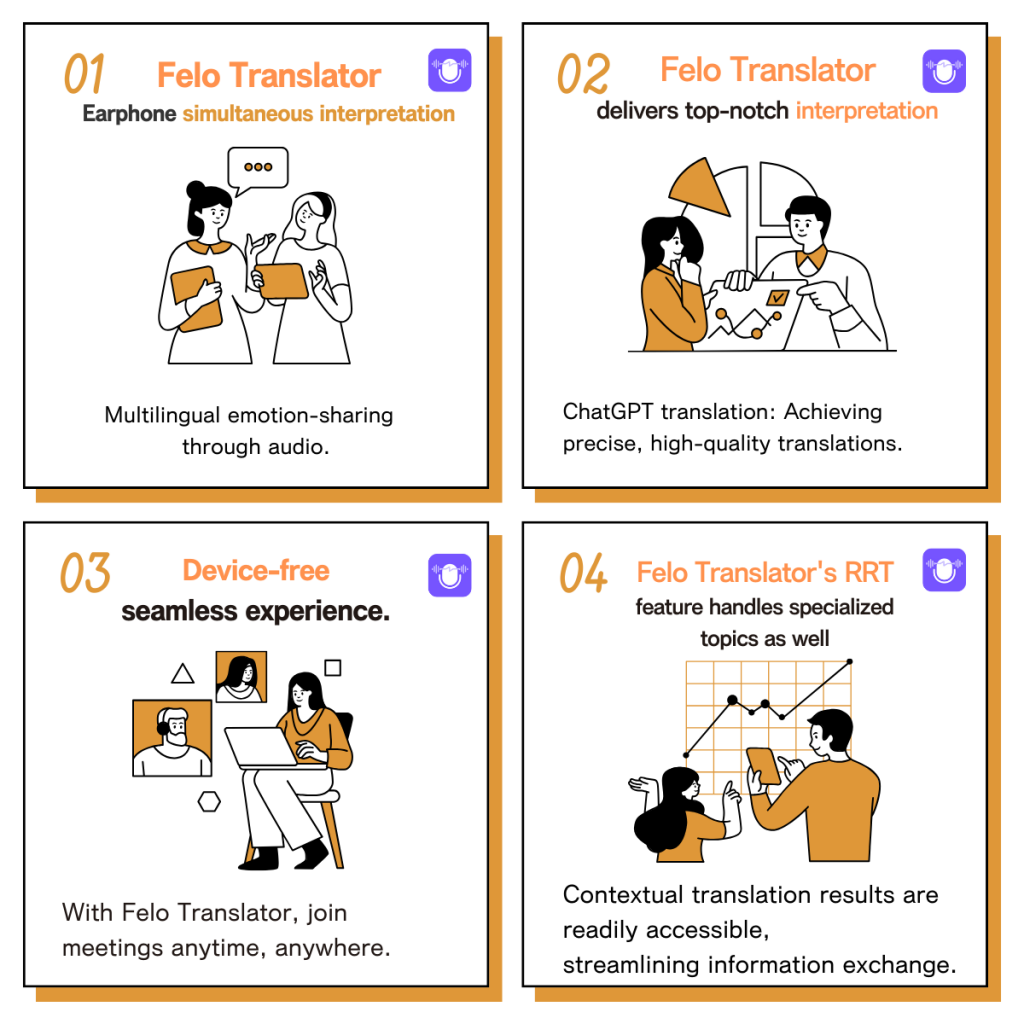

Felo Translator: An Efficient, Accurate Real-Time Translation Tool for Your Work

What is Felo Translator?

Felo Translator is an AI simultaneous interpretation app built on the GPT-4 engine and RRT technology.

It quickly and accurately translates speech in more than 15 languages, including English, Spanish, French, German, Russian, Chinese, Arabic, and Japanese. You can also download the original and translated texts to help you learn accurate expressions and pronunciation.

As a large-scale language model, ChatGPT can accurately convey the passion, expression, and dramatic effect of a stage performance, allowing audiences to fully understand and enjoy the excitement of different linguistic cultures.

How can Felo Translator assist simultaneous interpreters?

Felo Translator supports beginners in simultaneous interpretation by making sure nothing is left out of their notes and by translating technical terms more accurately.

Simultaneous interpretation is a complex and highly technical task that requires solid language skills, extensive specialized knowledge, and good teamwork. Only by continually learning and improving their skills can interpreters become qualified for this important work and help international communication run smoothly.

